I’m standing on the shoulders of giants this week – today, it is Ryan Revord.

Introduction

The COVID-19 outbreak lies at the heart of this blog post. Recently, we were asked to scale up a Citrix deployment, shall we say, rather heavily, and indeed, rather rapidly. When you’re talking about adding three-figure percentages to your workloads, you need to plan for an unprecedented capacity increase.

First port of call was Citrix infrastructure, which all looked good. Next we looked at network capacity – again, plenty of headroom for the expected increase. And then we came to storage – and oh boy, the numbers looked heavy.

Storage issues

The environment in question was already using FSLogix Profile Containers. The main thought was – how big do we possibly expect the profile to get for each user? This is a very important consideration in every Profile Containers deployment, and it’s really impossible to tell without sending users into the environment to test it. There are of course maintenance routines to consider – shrinking, compacting, pruning, and (dare I say it?) exclusions – but this is all a matter for another post (coming soon!). In this environment, nothing had yet been put into place for maintenance purposes, so we had to look at existing users and take an educated guess at how much storage we potentially required for each user.

Most profiles were in the 5-10GB mark (Teams, it would appear, throws out 4.6GB of data every time it is run for the first time, although this might have been down to an erroneous Chocolatey package), but we had some outliers around the 20GB mark, and a very small number slightly above this. However, this didn’t take into account OneDrive data, which was also scheduled to be synchronised into the profile VHD. Looking at OneDrive usage gave us an average of around 8-10GB – so being cautious, we anticipated looking at 30-40GB per user.

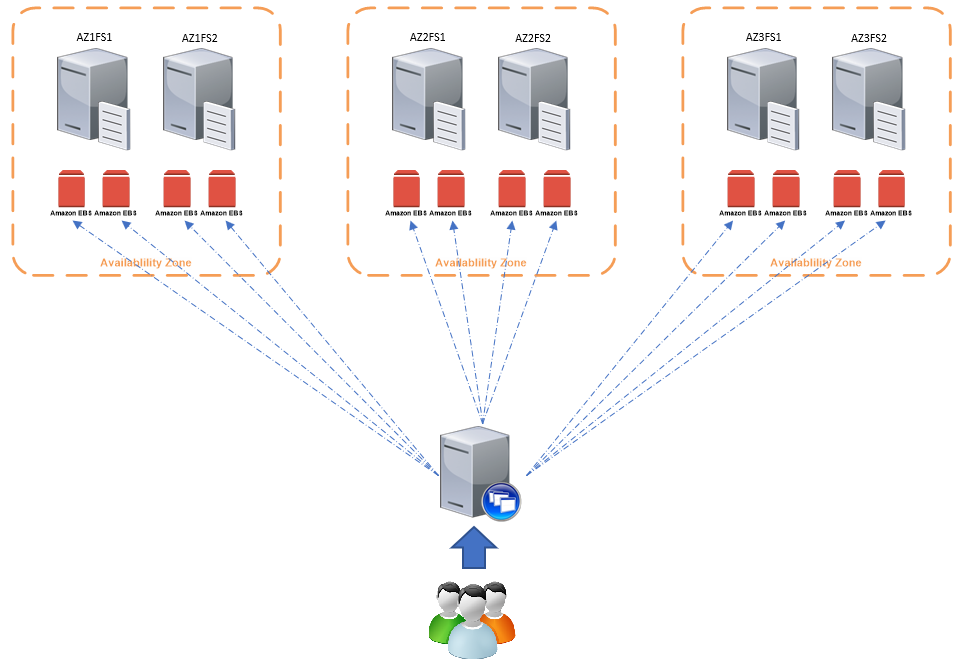

When you’re talking about tens of thousands of users, this number gets very big, very quickly. Storage capacity itself wasn’t an issue (this was a cloud-based deployment), but the volumes we could attach to the file servers were limited to 16TB each, and therefore we needed a sizeable number of these volumes. There were cloud services we could have leaned towards to accommodate this, but they had not yet been cleared for usage within the environment, and were unlikely to be anytime soon.
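To put rough numbers on that, here is a minimal back-of-the-envelope sketch. The function name `Get-RequiredVolumeCount` and the 10,000-user figure are purely illustrative assumptions (the real user count isn't stated here); only the 16TB volume limit and the 30-40GB per-user estimate come from the figures above.

```powershell
# Hypothetical helper: how many fixed-size volumes are needed for a given user count?
function Get-RequiredVolumeCount {
    param(
        [Parameter(Mandatory)][int]$UserCount,
        [Parameter(Mandatory)][double]$GBPerUser,
        [double]$VolumeSizeTB = 16      # per-volume limit mentioned above
    )
    $totalGB  = $UserCount * $GBPerUser
    $volumeGB = $VolumeSizeTB * 1024
    # Round up: a partly used volume still has to be provisioned
    [int][math]::Ceiling($totalGB / $volumeGB)
}

# Example with an assumed 10,000 users at 35GB each
Get-RequiredVolumeCount -UserCount 10000 -GBPerUser 35
```

This ignores free-space headroom and file system overhead, so in practice you would want to round up further.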

You could stand up Scale-Out File Server clusters and essentially combine all these volumes into a SAN-like pool (Leee Jeffries has done some great stuff on this), but again, this wasn’t an option because it would involve architectural changes.

The natural response to this problem is usually to front some DFS onto multiple shares, but several reasons prevented this – a) I hate DFS, b) there were authentication issues between the various domains in use and DFS would have exacerbated this, and c) directing users to DFS file shares seemed no more intelligent than simply directing them to a list of Windows file shares. The main problem we had was – what would happen when the first file share filled up? How would we direct users to the next one instead? The only way that it seemed possible to do this would be to use some sort of variable to direct subsets of users to particular file shares – but if something happened and one file share suddenly started using substantially more capacity than the others, we’d have to intervene and direct new users somewhere else.

It’s at this sort of time that you normally turn to the community to get a different perspective, and that’s where Ryan stepped in with a suggestion.

PowerShell to the rescue!

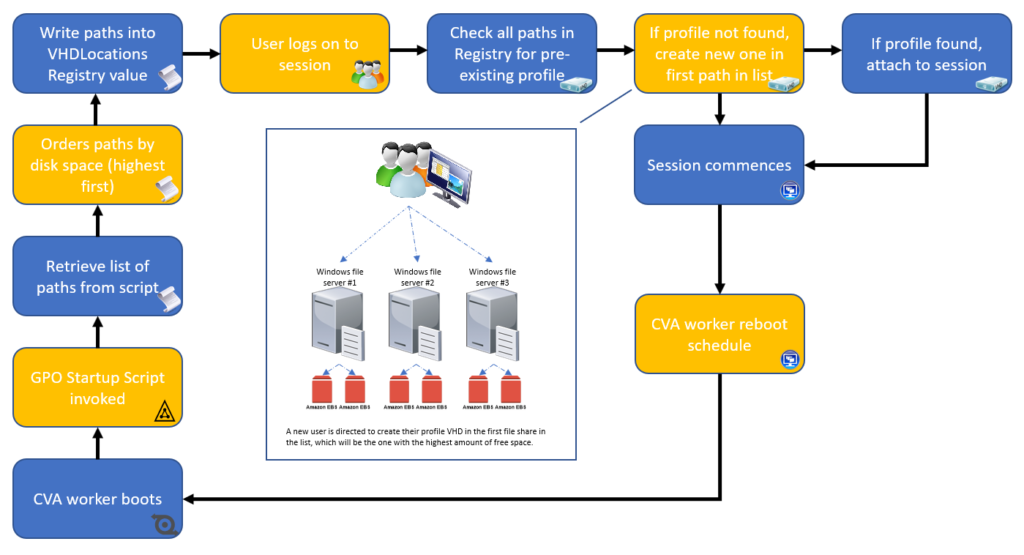

Ryan had experienced a similar problem, and his response was simply to use a PowerShell startup script to iterate through a list of file shares and order them by their free disk space. The ordered list would then be written to the FSLogix Registry value for VHDLocations. So technically, new users would *always* hit the file share with the most available space. Essentially, as long as the script was run often enough for the volume of new user onboarding, the profiles would be load balanced across the file shares. Here’s a diagram spelling out the process:

When a user logs on, FSLogix iterates through the entire list of VHDLocations searching for an existing profile. If it finds one, fine. If it doesn’t find one, though, it is created in the first entry in the list – which would be the file share with the most available space.
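The lookup order described above can be modelled in a few lines. This is only a sketch of the behaviour as described in this post, not FSLogix's actual code; the function name `Resolve-ProfileShare` and its parameters are made up for illustration.

```powershell
# Hypothetical model of the lookup: an existing profile wins, otherwise the first entry is used
function Resolve-ProfileShare {
    param(
        [Parameter(Mandatory)][string[]]$VhdLocations,   # ordered list, most free space first
        [string[]]$SharesWithProfile = @()               # shares already holding this user's container
    )
    foreach ($share in $VhdLocations) {
        if ($SharesWithProfile -contains $share) {
            return $share                                # existing profile found, keep using it
        }
    }
    # No profile anywhere in the list: a new one is created in the first entry
    return $VhdLocations[0]
}

$ordered = '\\server2\share1', '\\server1\share1'

# Existing user whose container already lives on server1
Resolve-ProfileShare -VhdLocations $ordered -SharesWithProfile '\\server1\share1'

# Brand new user: lands on the first (emptiest) share
Resolve-ProfileShare -VhdLocations $ordered
```

The point is that reordering the list only affects where new profiles are created; existing users keep finding their container wherever it already lives.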

For our environment, we were potentially onboarding thousands of users a day, so we had to run this as a Scheduled Task rather than a Startup Script. However, as long as the Scheduled Task was run with admin access (so it could write an HKLM value), this worked fine. Also, it is worth noting that the script (below) uses a Z: drive to query the free space – so be careful if you’re running it manually to make sure you don’t have a Z: drive mapped anywhere.

Permissions-wise, you need to make sure that you give Domain Computers (or at the very least the Citrix worker computer accounts) RX access to the root of the share so that you can determine free space. If running as a Scheduled Task, obviously this also needs to include the user the task is configured to run as.

Here’s the script – all that is needed is for you to populate the list of file share paths with your own, and it is ready to go! It populates both the Profile Containers and ODFC Registry values for VHDLocations, but if you don’t use both, it won’t make any difference – the unused one is just ignored. Big kudos to Ryan for his hard work on this!

```powershell
######################################
##### Begin profile path ordering ####
######################################

$shareStats    = @()   # one object per share: path plus free space in GB
$orderedShares = @()   # share paths sorted by free space, largest first
$gb = 1024 * 1024 * 1024

# Populate this array with all of the storage paths that can be addressed in the environment
$ProfileShares = @(
    "\\server1\share1"
    "\\server1\share2"
    "\\server2\share1"
    "\\server2\share2"
    "\\server3\share1"
    "\\server3\share2"
    "\\server4\share1"
    "\\server4\share2"
    "\\server5\share1"
    "\\server5\share2"
    "\\server6\share1"
    "\\server6\share2"
)

# Map each share to Z: in turn and record how much free space it has
$network = New-Object -ComObject WScript.Network
foreach ($share in $ProfileShares) {
    $network.MapNetworkDrive("Z:", $share)
    $drive  = Get-PSDrive Z
    $freeGB = [math]::Round($drive.Free / $gb)

    $shareStats += [pscustomobject]@{ Share = $share; freespace = $freeGB }

    # Remove the temporary network drive
    $network.RemoveNetworkDrive("Z:")
}

# Sort by free space (most first) and keep just the share paths
$orderedShares = @($shareStats | Sort-Object -Property freespace -Descending | ForEach-Object { $_.Share.ToString() })

# Set FSLogix share paths
$FSLogixProfilePath = "HKLM:\SOFTWARE\FSLogix\Profiles"
$FSLogixODFCPath    = "HKLM:\SOFTWARE\Policies\FSLogix\ODFC"
$FSLogixKeyName     = "VHDLocations"

foreach ($path in $FSLogixProfilePath, $FSLogixODFCPath) {
    # Cleanup: remove any existing value before writing the new one
    if ($null -ne (Get-Item -Path $path).GetValue($FSLogixKeyName)) {
        Remove-ItemProperty -Path $path -Name $FSLogixKeyName -Force
    }

    # Write the ordered list of shares
    New-ItemProperty -Path $path -Name $FSLogixKeyName -Value $orderedShares -PropertyType MultiString -Force

    # Debug value: show me sizes
    New-ItemProperty -Path $path -Name "scriptDebug" -Value $shareStats -PropertyType MultiString -Force
}

######################################
##### END profile path ordering ######
######################################
```

The script should be run as often as required. A Startup Script would be fine if your reboot schedule means that the number of users you are onboarding in between reboots doesn’t potentially exceed the capacity of a single file share; otherwise, run it as a Scheduled Task. We have been running it every hour, as we are seeing up to two thousand users per day being onboarded (and our Citrix workers are never rebooted anyway).
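For anyone who wants to script the hourly schedule as well, here is a minimal sketch using the built-in ScheduledTasks cmdlets. The script path and task name are placeholders I have made up, and running as SYSTEM is an assumption: it gives the write access to HKLM, and it means the shares are queried as the computer account, which ties in with the Domain Computers permissions mentioned earlier. Adjust to suit your own environment.

```powershell
# Assumed location of the ordering script (placeholder path)
$scriptPath = 'C:\Scripts\Set-FSLogixShareOrder.ps1'

# Run the script with Windows PowerShell
$action = New-ScheduledTaskAction -Execute 'powershell.exe' `
    -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$scriptPath`""

# Start now and repeat every hour
$trigger = New-ScheduledTaskTrigger -Once -At (Get-Date) `
    -RepetitionInterval (New-TimeSpan -Hours 1)

# Run elevated as SYSTEM so the HKLM values can be written
$principal = New-ScheduledTaskPrincipal -UserId 'NT AUTHORITY\SYSTEM' `
    -LogonType ServiceAccount -RunLevel Highest

Register-ScheduledTask -TaskName 'FSLogix share ordering' `
    -Action $action -Trigger $trigger -Principal $principal
```

On older versions of Windows Server you may also need to pass `-RepetitionDuration` to the trigger for the repetition to carry on indefinitely, so test it on your own build first.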

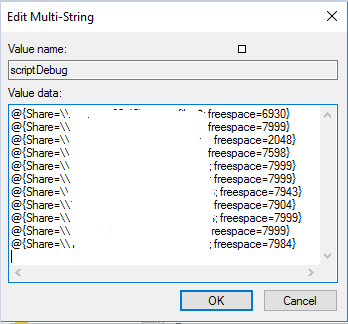

The script also writes an extra value called scriptDebug to the Registry key, which shows the free disk space of all the target file shares the last time it was run – really handy.

So with this being run, our users are directed to any one of (in this particular case) twelve file shares of 16TB each. This can simply be added to by provisioning additional file servers and volumes and adding them to the list in the script, so their new capacity will be instantly utilized.

Resiliency

Of course, this doesn’t provide resiliency. There was no requirement here to provide resiliency, merely the capacity to absorb an unprecedented and exceptional uplift of user numbers. There is resiliency in terms of absorbing the loss of an Availability Zone, but only in terms of users being able to log on – the users in the AZ (or on that server, or file share) will still lose their profiles and have a new one created elsewhere.

If you do need resiliency for the profiles themselves then there are lots of options here (will hopefully cover some of them off in my upcoming blog post about FSLogix best practices). In this situation it simply wasn’t required, because the business didn’t want to have to pay the cost of the extra storage (doubling it on top of an initial 170TB+ increase can be a bitter pill to swallow). Besides, with OneDrive and Known Folder Move (blog post coming on this too!), it was felt that users would simply resync their data, Teams cache and Outlook cache and then put back any other minor customizations at their leisure. Not ideal for people like myself who like to provide a seamless, smooth roaming experience – but this was a risk that they chose to absorb.

Summary

So, Ryan has provided us with a nice simple way to spread users across multiple file shares in a quick and easy fashion, and to address the issue of each file share potentially filling up.

A lot of people will say “why not just use Cloud Cache”, but there are a number of reasons why we didn’t. Firstly, Cloud Cache replicates profiles rather than distributing them, and we were primarily looking to split the load across the file shares rather than provide redundancy. Secondly, in the past Cloud Cache has been very buggy and it is only in more recent releases that it has improved, so I was loth to hang a production environment on it based on past experience. It is also unclear how Cloud Cache deals with a file share being at capacity – as far as I know it looks for availability only, although I am open to being educated if I am mistaken. Also, the local cache could potentially have given us a 300GB storage increase for each server that was deployed, and that again would have been an unpalatable cost implication.

For our purposes this has worked very well; however, there are a couple of points to be aware of.

Firstly, if someone expands their profile massively they could still potentially fill the file share. We have set profiles to a limit of 100GB but obviously if many users suddenly underwent a huge increase we might have issues. We have to monitor the file shares carefully to keep an eye out for sudden profile size increases and potentially then prune and shrink them (Aaron Parker has some good articles on this, will also touch on it in an upcoming post).

Secondly, finding a user’s profile when it is on one of a large number of file shares is a bit annoying and takes quite a while! To this end we are going to write an environment variable into the user profile with the name of the configured file share and display it using BGInfo to save us from this problem.
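In the meantime, a quick search across the shares can be sketched as below. This is only an illustration: the function name `Find-UserProfileShare` is made up, and it assumes each user's container sits in a folder on the share whose name contains the username, so check it against your own folder naming before relying on it.

```powershell
# Hypothetical helper: walk the shares in order and return the first one holding a folder for the user
function Find-UserProfileShare {
    param(
        [Parameter(Mandatory)][string[]]$Shares,
        [Parameter(Mandatory)][string]$UserName
    )
    foreach ($share in $Shares) {
        # Assumes the profile folder name contains the username
        $match = Get-ChildItem -Path $share -Directory -Filter "*$UserName*" -ErrorAction SilentlyContinue |
            Select-Object -First 1
        if ($match) {
            return [pscustomobject]@{ Share = $share; Folder = $match.FullName }
        }
    }
}

# Example usage with placeholder share paths and username:
# Find-UserProfileShare -Shares '\\server1\share1', '\\server1\share2' -UserName 'jbloggs'
```

Be aware that the wildcard match will also pick up similar usernames (jsmith and jsmith2, for example), so treat the result as a pointer rather than gospel.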

However, I have to say that, for use cases like ours, this has been a really good method to use. Hopefully some more of you out there may benefit from it, and a huge round of applause is due to Ryan Revord for developing this and sharing it – as I’ve said many times before, community rocks!
